For projects getting off the ground, the table creation feature (Schema) isn’t just a nice addition; it’s an absolute necessity. I think it could use some refinement.

Use case:

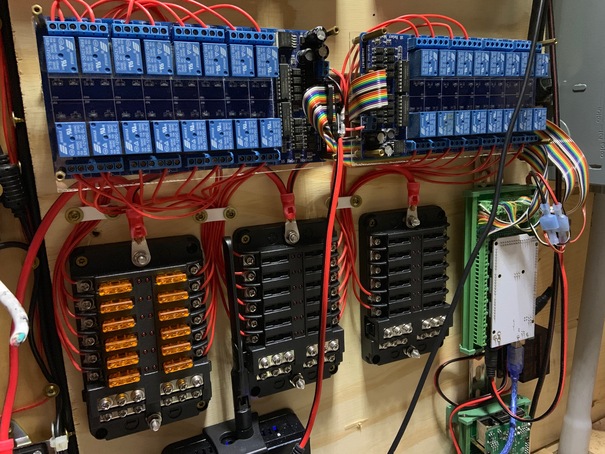

I have a school bus that I’ve been converting into a tiny house/motorhome for a couple of years. It’s close to done. I have mostly DC appliances, all controllable via relays set up on an Arduino board, and I use momentary push buttons for my light switches, with a total of 9 zones.

Arduino cannot reliably sense simple button pushes without a (pull-up or pull-down) resistor, so I’ve opted to use a DIY joystick kit to sense the button presses instead. HRORM’s job in this context is to capture any and all events.

Architecture: I’m trying to integrate HRORM into a CDI deployment (Thorntail).

Problem: HRORM assumes you already have the necessary tables and columns. That is ill-suited to booting a project from scratch with an embedded database. H2 is actually a fairly decent datastore with all the features you’d expect. The current-year argument is strong here: quite a few database technologies let you hit the ground running with zero configuration, and it’s a shame that the most robust datastores largely lack this.

A flow like:

- if schema !exists AND I should create schemas -> create schema
- for each table: if table !exists AND I should create tables -> create table
- for each column: if column !exists AND I should create columns -> alter table add column
- for each column: if column type !matches expected type AND I should alter columns -> alter table alter column

Where “I should” could be Dao builder flags like:

`daoBuilder.createSchema(true).createTables(true).createColumns(true).alterColumns(true).build(connection)`

The Dao would need to validate the schema at build time if any of these were true. `.build(connection)` could also take a Schema object, or a list of Descriptors, to consider during validation.
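A minimal sketch of the flow and flags above, assuming both the DAO definitions and the live database can be reduced to a map of table name to column name to SQL type. `SchemaPlanner`, `Flags` and `Snapshot` are hypothetical names, not hrorm API; the four booleans stand in for the proposed builder flags, and the emitted DDL is H2-flavored and unverified against other databases. In a real build step the `Snapshot` would be filled from JDBC `DatabaseMetaData.getTables`/`getColumns` or from INFORMATION_SCHEMA.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical planner: compares what the DAOs expect with what the database has
// and emits only the DDL the user has authorized. Not part of hrorm.
class SchemaPlanner {

    // Stand-ins for createSchema/createTables/createColumns/alterColumns.
    record Flags(boolean createSchema, boolean createTables,
                 boolean createColumns, boolean alterColumns) {}

    // Current database state: schema names, and table -> (column -> SQL type).
    record Snapshot(Set<String> schemas, Map<String, Map<String, String>> tables) {}

    static List<String> plan(String schema, Map<String, Map<String, String>> expected,
                             Snapshot actual, Flags flags) {
        List<String> ddl = new ArrayList<>();
        if (!actual.schemas().contains(schema) && flags.createSchema()) {
            ddl.add("CREATE SCHEMA " + schema);
        }
        for (var table : expected.entrySet()) {
            String qualified = schema + "." + table.getKey();
            Map<String, String> existing = actual.tables().get(table.getKey());
            if (existing == null) {
                if (flags.createTables()) {
                    List<String> cols = new ArrayList<>();
                    table.getValue().forEach((c, t) -> cols.add(c + " " + t));
                    ddl.add("CREATE TABLE " + qualified + " (" + String.join(", ", cols) + ")");
                }
                continue; // columns of a missing table are covered by CREATE TABLE
            }
            for (var col : table.getValue().entrySet()) {
                String actualType = existing.get(col.getKey());
                if (actualType == null) {
                    if (flags.createColumns()) {
                        ddl.add("ALTER TABLE " + qualified + " ADD COLUMN "
                                + col.getKey() + " " + col.getValue());
                    }
                } else if (!actualType.equalsIgnoreCase(col.getValue()) && flags.alterColumns()) {
                    ddl.add("ALTER TABLE " + qualified + " ALTER COLUMN "
                            + col.getKey() + " " + col.getValue());
                }
            }
        }
        return ddl;
    }
}
```

With every flag off, `plan` only reports drift (an empty list means nothing was authorized, not that nothing is missing), so a real build step would likely also compute the unfiltered plan to decide whether to fail.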

None of this is meant to imply that any of it is simple or in scope. A baby step in this direction would be to modify Schema.java to create things conditionally, based on whether they already exist, using INFORMATION_SCHEMA queries (in the SQL returned by `schema.sql()`). This would avoid an exception when running the operation multiple times. Dao could also offer methods like `boolean tableExists()`, `boolean schemaExists()`, etc., so the user doesn’t have to go back to the Connection object for answers.
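A sketch of that baby step, with one swap worth naming: instead of guarding each statement with an INFORMATION_SCHEMA query, it rewrites `CREATE TABLE`/`SEQUENCE`/`SCHEMA` into H2’s `IF NOT EXISTS` form, and uses INFORMATION_SCHEMA only for a `tableExists` helper. It assumes the statements from `schema.sql()` have already been split into individual strings; `IdempotentSchema`, `makeIdempotent` and `tableExists` are hypothetical names, not hrorm API.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helpers; not part of hrorm's Schema class.
class IdempotentSchema {

    // CREATE TABLE|SEQUENCE|SCHEMA that is not already followed by IF NOT EXISTS.
    private static final Pattern CREATE = Pattern.compile(
            "(?i)^\\s*CREATE\\s+(TABLE|SEQUENCE|SCHEMA)(?!\\s+IF\\s+NOT\\s+EXISTS)\\s+");

    // Rewrites one DDL statement so that re-running it is a no-op in H2.
    static String makeIdempotent(String statement) {
        Matcher m = CREATE.matcher(statement);
        if (!m.find()) {
            return statement; // not a CREATE we handle, or already guarded
        }
        return "CREATE " + m.group(1).toUpperCase(Locale.ROOT) + " IF NOT EXISTS "
                + statement.substring(m.end());
    }

    // Existence check via INFORMATION_SCHEMA. H2 stores unquoted identifiers in
    // upper case, so callers should pass names like "PUBLIC" and "EVENTS".
    static boolean tableExists(Connection connection, String schema, String table)
            throws SQLException {
        String sql = "SELECT COUNT(*) FROM INFORMATION_SCHEMA.TABLES"
                + " WHERE TABLE_SCHEMA = ? AND TABLE_NAME = ?";
        try (PreparedStatement ps = connection.prepareStatement(sql)) {
            ps.setString(1, schema);
            ps.setString(2, table);
            try (ResultSet rs = ps.executeQuery()) {
                return rs.next() && rs.getLong(1) > 0;
            }
        }
    }
}
```

The `IF NOT EXISTS` route keeps the check and the create in one statement, which avoids a race between checking and creating; the INFORMATION_SCHEMA query is still useful for the read-only `tableExists()`-style methods on Dao.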

A more advanced solution would be for hrorm to ascertain the state of the datastore itself via validation, which could produce a list of remediations: CreateTableRemediation, AlterColumnRemediation, etc. For each one, hrorm would check both whether the user has authorized it and whether hrorm supports automatic remediation for that type. For anything it cannot act on (UnsupportedOperation or otherwise), it would throw an exception listing every step that needed to be done but could not be.
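A minimal sketch of that remediation pass, assuming remediations can be modelled as plain data (a kind, a description, the DDL, and whether hrorm could apply it). Rather than one class per remediation (CreateTableRemediation, AlterColumnRemediation), it uses a single hypothetical `Remediation` record with a `Kind` enum; `Remediator` and `UnremediatedSchemaException` are illustrative names only, and the `executor` stands in for running a JDBC statement.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;

// Hypothetical remediation model sketched from the proposal; none of these types exist in hrorm.
class Remediator {

    enum Kind { CREATE_SCHEMA, CREATE_TABLE, CREATE_COLUMN, ALTER_COLUMN }

    // One step needed to bring the datastore in line with the DAO definitions.
    // automatable == false models "hrorm cannot fix this itself" (e.g. VARCHAR -> INTEGER).
    record Remediation(Kind kind, String description, String ddl, boolean automatable) {}

    // Carries every step that could not be applied, so the user sees them all at once.
    static class UnremediatedSchemaException extends RuntimeException {
        final List<Remediation> pending;

        UnremediatedSchemaException(List<Remediation> pending) {
            super("Manual schema changes needed: "
                    + pending.stream().map(Remediation::description).toList());
            this.pending = List.copyOf(pending);
        }
    }

    // Applies the steps only if every one is both authorized and automatable;
    // otherwise throws before touching the database. Returns the DDL executed.
    static List<String> apply(List<Remediation> steps, Set<Kind> authorized,
                              Consumer<String> executor) {
        List<Remediation> pending = new ArrayList<>();
        for (Remediation step : steps) {
            if (!authorized.contains(step.kind()) || !step.automatable()) {
                pending.add(step);
            }
        }
        if (!pending.isEmpty()) {
            throw new UnremediatedSchemaException(pending);
        }
        List<String> executed = new ArrayList<>();
        for (Remediation step : steps) {
            executor.accept(step.ddl()); // a real version would run this through JDBC
            executed.add(step.ddl());
        }
        return executed;
    }
}
```

This sketch validates everything before executing anything, so a failed check leaves the database untouched. The alternative reading of the proposal, applying whatever it can and then throwing for the rest, is also possible but can leave the schema half-migrated.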

Alter is particularly tricky to automate. Let’s say I change `.withStringColumn(…)` to `.withIntegerColumn(…)`. An AlterColumnRemediation could suggest three courses of action to the user: use `.withConvertingStringColumn(…)` instead, migrate the data manually, or switch (back) to `.withStringColumn(…)`. Nothing HRORM can do automatically, and that’s fine.
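One way an AlterColumnRemediation could decide between "alter it myself" and "tell the user" is a small whitelist of type changes assumed to be lossless. The sketch below is hypothetical (`AlterColumnAdvisor` is not hrorm API); its whitelist covers only integer widening, it does not parse lengths or precision such as `VARCHAR(50)`, and the suggestion strings simply restate the three options above.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

// Hypothetical policy an AlterColumnRemediation might use; not hrorm API.
// Only bare type names are compared: lengths/precision such as VARCHAR(50) are not parsed.
class AlterColumnAdvisor {

    // Type changes assumed lossless for existing rows (integer widening only).
    private static final Map<String, Set<String>> SAFE_WIDENINGS = Map.of(
            "SMALLINT", Set.of("INTEGER", "BIGINT"),
            "INTEGER", Set.of("BIGINT"));

    static boolean isAutomatable(String from, String to) {
        String f = from.toUpperCase(Locale.ROOT);
        String t = to.toUpperCase(Locale.ROOT);
        return f.equals(t) || SAFE_WIDENINGS.getOrDefault(f, Set.of()).contains(t);
    }

    // Empty list: the change is safe for hrorm to apply (if the user authorized it).
    // Otherwise: the manual options, e.g. for an exception message.
    static List<String> suggestions(String column, String from, String to) {
        if (isAutomatable(from, to)) {
            return List.of();
        }
        return List.of(
                "Keep " + column + " as " + from + " and convert in Java"
                        + " (for a string column, .withConvertingStringColumn(...))",
                "Migrate the data in " + column + " from " + from + " to " + to + " manually",
                "Switch the DAO definition for " + column + " back to " + from);
    }
}
```

Narrowing changes such as BIGINT to INTEGER fall through to the manual suggestions, since existing rows might not fit.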

My other challenges so far:

Unfortunately, most of the Java Arduino control suites I tried failed miserably on my Mega2560, so I had to write my own, which caused some headaches. I had the same problem with joystick input: Java libraries for joystick input failed me spectacularly. jinput is the only real option, and it cannot detect joystick disconnects (at least on Linux) or new connections.

I use joystick input to tell the Java EE app which zones (sets of relays with a list of acceptable permutations) to cycle, by firing a CDI event. First, I want to record all events to an H2 database created on the fly using hrorm. That way I can handle various types of button presses (long press, short press, double press, etc.) and have a log of all activity.
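Once press and release events are logged, telling long, short and double presses apart is a small state machine over timestamps. The sketch below assumes each logged row carries a pressed/released flag and a millisecond timestamp for a single button; `PressClassifier`, `ButtonEvent`, and both thresholds are hypothetical and would need tuning on the real switches.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical classifier over one button's logged events; thresholds are guesses.
class PressClassifier {

    enum Gesture { SHORT, LONG, DOUBLE }

    // One logged row: button went down (pressed = true) or up, at a millisecond timestamp.
    record ButtonEvent(boolean pressed, long atMillis) {}

    static final long LONG_PRESS_MS = 600; // held at least this long -> LONG
    static final long DOUBLE_GAP_MS = 300; // second tap starting within this gap -> DOUBLE

    static List<Gesture> classify(List<ButtonEvent> events) {
        List<Gesture> gestures = new ArrayList<>();
        Long pressStart = null;   // when the currently held press went down
        Long pendingShort = null; // release time of a short press that may still become a double
        for (ButtonEvent e : events) {
            if (e.pressed()) {
                pressStart = e.atMillis();
                continue;
            }
            if (pressStart == null) {
                continue; // release with no matching press, e.g. logging began mid-press
            }
            long held = e.atMillis() - pressStart;
            boolean withinGap = pendingShort != null && pressStart - pendingShort <= DOUBLE_GAP_MS;
            if (held < LONG_PRESS_MS && withinGap) {
                gestures.add(Gesture.DOUBLE); // second quick tap completes a double press
                pendingShort = null;
            } else {
                if (pendingShort != null) {
                    gestures.add(Gesture.SHORT); // earlier tap was not followed up in time
                }
                if (held >= LONG_PRESS_MS) {
                    gestures.add(Gesture.LONG);
                    pendingShort = null;
                } else {
                    pendingShort = e.atMillis();
                }
            }
            pressStart = null;
        }
        if (pendingShort != null) {
            gestures.add(Gesture.SHORT); // trailing tap with nothing after it
        }
        return gestures;
    }
}
```

For example, press/release at 0/100 and 250/350 ms yields one DOUBLE, while taps at 0/100 and 1000/1100 ms yield two SHORTs. This works over a finished log; classifying live would additionally need a timer that emits SHORT once `DOUBLE_GAP_MS` passes without a second press, which the sketch leaves out.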